CARPE Blog Posts

ATP/AERA Blog Post - Gemma Cherry and Conor Scully, May 25th, 2022.

In the spring of 2022, CARPE Prometric Post-Doctoral Researcher Dr. Gemma Cherry and CARPE PhD candidate Conor Scully attended the Association of Test Publishers (ATP) Innovations in Testing conference in Orlando, Florida, and the American Educational Research Association (AERA) conference in San Diego, California. This blog post highlights some of their key takeaways in relation to the latest trends and innovations in the field of educational assessment.

ATP

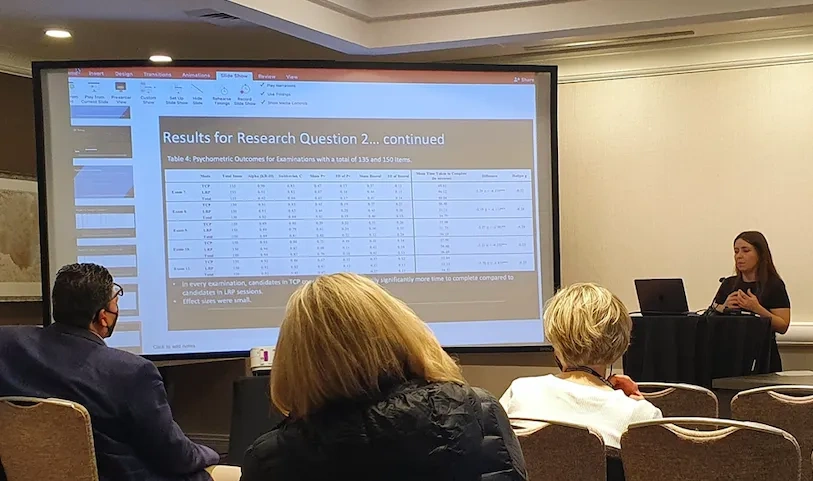

The theme of ATP 2022 was Bridging Opportunities for Better Assessment, and many presentations focused on how the assessment industry can learn, adapt, and grow in light of lessons learned from the Covid-19 pandemic. Of key interest to attendees was how issues of diversity and inclusion can be moved forward. The conference brought together industry leaders, and discussions addressed the rapidly changing nature of assessment. Of particular interest to Gemma and Conor were presentations focusing on post-Covid assessment environments. At the conference, Gemma, along with Prometric’s Dr. Li Ann Kuan, gave a presentation on the psychometric comparability of remote proctoring and in-centre modes of assessment administration. The presentation was very well received by delegates and generated considerable discussion of its promising results.

Remote proctoring was frequently mentioned at ATP, and while this is not a new topic, the context in which this mode of administration operates has changed significantly. It is clear that remote proctoring is here to stay, and it is likely that, going forward, test takers will be offered a hybrid option, choosing to sit their examinations either at a traditional testing centre or remotely. One particularly relevant question raised at ATP was what role paper-based testing will play in the future. It was highlighted that in some instances, paper-based assessment may still be the best way to make assessment fully accessible and inclusive. A number of speakers said that it would be important for the testing industry to be proactive and to put plans in place to ensure accessibility, rather than reacting to challenges as they arise (as happened during the pandemic). While remote proctoring has become a standard offering, it remains to be seen how it can be adapted to ensure accessibility and appropriateness for all test takers. Strong arguments were made at various sessions that we should not become complacent about modal comparability, despite positive research findings indicating that remote proctoring outcomes are similar to those observed in test centres.

AERA

The theme for AERA 2022 was Cultivating Equitable Education Systems for the 21st Century. A significant number of talks centred on diversity, equality, and inclusion (DEI), which was also a major theme at ATP. Issues of social justice within education are more relevant than ever, and numerous discussions took place on the impact of race, gender, and sexuality on educational outcomes. In addition to scheduling panels and papers on these issues, the conference organisers also made sure to elevate the voices of people belonging to minority groups, with the welcome sight of panels composed entirely of black men and women discussing the experiences of black Americans within the education system.

Gemma was lucky enough to give two presentations at AERA: one relating to her work with CARPE (on remote proctoring) and the other on her doctoral work, in which she examined educational inequalities across urban and rural locations from an intersectional perspective.

Despite reduced in-person attendance due to Covid-19 (attendees had the option to participate virtually), the conference schedule was packed with on-site papers, panels, and roundtables. At any one time, approximately sixty sessions were taking place! As a result, Conor and Gemma had to be strategic and organised about what to attend. Conor focused his time on sessions relating to mixed methods research (which is relevant to his doctoral work) and LGBTQ+ issues, while Gemma attended multiple sessions on remote proctoring and rural education (her PhD topic).

In addition to attending sessions, the conference also provided an opportunity to meet and socialise with other young researchers, and to see the sights of San Diego.

A particular highlight was a dinner organised by Dr. Larry Ludlow of Boston College (BC) and attended by numerous current and former BC students (including CARPE Director Michael O’Leary, who completed his PhD at BC in 1999).

Attending these conferences gave Gemma and Conor the opportunity to network with experts in the assessment and wider educational research fields. Their experiences have proved to be very beneficial to their work at CARPE and they can now bring new insights, understandings and perspectives to current trends and innovations in assessment. Both are very grateful for the funding provided by Prometric which made all of this possible.

Can Children's Concentration Be Improved Using Cognitive Training? - Éadaoin Slattery, February 28th, 2022.

In school, children are required to listen to the teacher, follow instructions and complete independent work. These requirements rely on children’s ability to pay attention over time. Researchers call this type of attention sustained attention; in everyday life, it is commonly referred to as ‘concentration’ or ‘focus’. The importance of sustained attention for learning has been demonstrated in studies showing an association between students’ sustained attention and both their academic achievement and their classroom behaviour. However, despite its importance, difficulties with sustained attention are common in children, with as many as 24% exhibiting poor concentration. Attentional problems compromise academic achievement. This evidence highlights the strong need to develop evidence-based interventions to enhance students’ sustained attention.

In recent years, cognitive attention training, sometimes called brain training, has been identified as a potential intervention to enhance sustained attention. Training involves the repetitive practice of a cognitive task designed to exercise parts of the brain related to attention. The current study sought to evaluate the efficacy of a school-based attention training programme, Keeping Score!, in improving students’ sustained attention. Training was based on silently keeping score during a fast-paced game of table tennis, which required children to exercise their sustained attention. To test the impact of training, we conducted a small-scale randomised controlled trial, a design regarded as the gold standard for evaluating efficacy. In the study, we assigned children to either a training group or a control group. Both groups completed three sessions per week for six weeks. The control group completed the same activity as the training group, except that they were not required to keep score mentally; instead, the researcher called out the score as each point was won. We measured sustained attention before training, immediately after training and at a 6-week follow-up. Contrary to our expectations, we found no improvements in sustained attention following training.

The obvious question is why no improvements were found in sustained attention. Several factors could potentially explain the null findings, such as the short training duration and small sample size. Another possibility, which we argue in the paper, is that cognitive attention training alone may not be sufficient to enhance sustained attention. This is because our capacity to sustain attention at any moment is determined by an interplay of cognitive, emotional, arousal and motivational factors, whereas cognitive attention training primarily targets the cognitive factors. Other interventions that target the multiple factors underlying sustained attention, such as mindfulness training, may have more success in improving students’ ability to pay attention.

So, can concentration be improved using cognitive training? The answer is not a simple yes or no. This study suggests that it is very difficult to enhance students’ ability to pay attention using cognitive training methods.

The paper from this study is currently under review. Please email Éadaoin (eadaoin.slattery@dcu.ie) for a preprint of the study.

References

Döpfner, M., Breuer, D., Wille, N., Erhart, M., & Ravens-Sieberer, U. (2008). How often do children meet ICD-10/DSM-IV criteria of attention deficit-/hyperactivity disorder and hyperkinetic disorder? Parent-based prevalence rates in a national sample–results of the BELLA study. European Child & Adolescent Psychiatry, 17(1), 59-70.

Rabiner, D. L., Murray, D. W., Skinner, A. T., & Malone, P. S. (2010). A randomized trial of two promising computer-based interventions for students with attention difficulties. Journal of Abnormal Child Psychology, 38(1), 131-142.

Slattery, E. J., Ryan, P., Fortune, D. G., & McAvinue, L. P. (2022). Unique and overlapping contributions of sustained attention and working memory to parent and teacher ratings of inattentive behavior. Child Neuropsychology, 1-23.

Steinmayr, R., Ziegler, M., & Träuble, B. (2010). Do intelligence and sustained attention interact in predicting academic achievement? Learning and Individual Differences, 20(1), 14-18.

Does the use of multimedia in test questions affect the performance and behaviour of test takers? - Paula Lehane, September 1st, 2021.

While paper-based assessments are largely restricted to traditional multiple-choice or short-answer questions, the range of items possible in digital assessments is more extensive and continues to expand as technology develops. In particular, the use of static (e.g. high-definition images), dynamic (e.g. videos, animations) and interactive (e.g. simulations) multimedia stimuli has allowed test developers to reimagine which knowledge, skills and abilities can be assessed (Bryant, 2017). It is therefore hardly surprising that education systems around the world (e.g. Ireland, New Zealand) are now attempting to devise their own digital assessments for post-primary students. Unfortunately, while there is broad faith that these digital assessments can improve the quality and scope of the testing process, the exact nature of this added value, if it exists, has yet to be properly described or verified (Russell & Moncaleano, 2019). To begin to address this research gap, two related studies were undertaken to investigate the extent to which different multimedia stimuli can affect test-taker performance and behaviour.

For Study 1, an experiment was conducted with 251 Irish post-primary students using animated and text-image versions of the same digital assessment of scientific literacy. Eye movement and interview data were also collected from subsets of these students (n=32 and n=12 respectively) to determine how different multimedia stimuli can affect test-taker attentional behaviour. The results indicated that, overall, there was no significant difference in test-taker performance when identical items used animated or text-image stimuli. In contrast, the eye movement data revealed practical differences in attentional patterns between conditions. This finding indicates that the type of multimedia stimulus used in an item can affect test-taker attentional behaviour without necessarily affecting overall performance. This is significant because understanding how stimulus modality affects test-taker behaviour will ultimately support the quality of inferences that can be drawn from digital assessments.

Study 2 involved 24 test-takers completing a series of simulation-type items in which they generated their own data to answer the test questions. Eye movement, interview and test-score data were gathered to provide insight into test-taker engagement with these items. The data suggest that increasing test-taker familiarity with simulation-type items can change test-taker attentional behaviour, leading to more effective test-taking strategies. Furthermore, the data revealed that successful test-takers directed significantly more of their attention to the relevant areas of the simulation output. However, generating large volumes of data can disrupt the predictive properties of these behaviours. Examination of other process data variables (e.g. time-on-task, number of simulations run) showed that some of the most common interpretations ascribed to the frequencies of these behaviours (e.g. Greiff et al., 2015) are context- and subject-specific.

Taking into consideration the recent initiatives involving digital assessments for the Leaving Certificate Examination (State Examination Commission, 2021) as well as the ‘Digital Strategy for Schools’ (Department of Education and Skills, 2021), the findings of this research will be particularly pertinent to Irish educational policy makers. However, they also have relevance well beyond the Irish context. In particular, this research provides test-developers worldwide with insights as to how item features and test-taker attentional behaviours influence the psychometric properties of assessments and the inferences drawn from the data they provide.

Reference:

Lehane, P. (2021). The Impact of Test Items Incorporating Multimedia Stimuli on the Performance and Attentional Behaviour of Test-Takers (PhD Thesis). Institute of Education: Dublin City University, Ireland.

Other references:

Bryant, W. (2017). Developing a Strategy for Using Technology-Enhanced Items in Large Scale Standardized Tests. Practical Assessment, Research & Evaluation, 22(1), 1–5. https://scholarworks.umass.edu/pare/vol22/iss1/1/

Department of Education and Skills (DES). (2021). Digital Strategy for Schools Consultation Framework [Website]. https://www.education.ie/en/Schools-Colleges/Information/Information-Communications-Technology-ICT-in-Schools/digital-strategy-for-schools-consultation-framework.html

Greiff, S., Wüstenberg, S., & Avvisati, F. (2015). Computer-generated log-file analyses as a window into students' minds? A showcase study based on the PISA 2012 assessment of problem solving. Computers & Education, 91, 92–105. https://doi.org/10.1016/j.compedu.2015.10.018

Russell, M., & Moncaleano, S. (2019). Examining the Use and Construct Fidelity of Technology-Enhanced Items Employed by K-12 Testing Programs. Educational Assessment, 24(4), 286-304. https://doi.org/10.1080/10627197.2019.1670055

State Examination Commission. (2021). Leaving Certificate Computer Science. https://www.examinations.ie/?l=en&mc=ex&sc=cs

Inside the Black Box of the Objective Structured Clinical Examination - Conor Scully, August 4th, 2021.

The Objective Structured Clinical Examination (OSCE) is an assessment format common in the health sciences, and nursing in particular. In an OSCE, a student moves through an exam hall, completing a series of “stations” at which they undertake a specific task or series of tasks, such as measuring a patient’s vital signs. Students are judged at each station by an expert examiner, who awards them marks on the basis of a marking guide that has been designed for that station. A key advantage of the OSCE is that all students complete the same stations, and are judged according to the same set of criteria.

Because of the standardisation inherent in the OSCE, it is generally thought to produce consistent scores that can be used to make accurate decisions about students’ relative performance levels; as such, it is frequently used as a summative assessment in undergraduate nursing programmes, to determine whether a student has demonstrated sufficient mastery of the curriculum to progress to the next year of study. However, as with all forms of assessment, it is important to document evidence that decisions made on the basis of OSCE data are not only reliable (consistent) but, most importantly, valid (accurate).

Assessor cognition is a relatively recent field of inquiry that seeks to understand the processes by which examiners of performance assessments come to make judgements about students. In theory, it should be possible to ensure that all examiners interpret the marking guide in exactly the same way, such that the only factor affecting the scores awarded to students is their different ability levels. However, research has consistently shown that assessors bring with them a range of individual factors that influence how they interpret student performances, and that even rigorous professional development and training is not enough to bring about complete agreement regarding a student’s performance.

As it stands, the cognitive processes assessors employ when making judgements about nursing students are unknown: something of a “black box”. Research on assessors in medicine has indicated that they lack a fixed sense of what constitutes “good” performance, and are therefore likely to judge students against their own subjective ideas about competence; that they tend to focus on different aspects of performance when determining whether a student is “good”; and that they have difficulty translating verbal descriptions of performance into a numerical score. However, it is unclear whether nursing assessors judge students in the same way as their counterparts in medicine.

This lack of clarity concerning nursing assessors’ judgement processes represents a possible threat to the reliability of nursing OSCE scores, as individual students’ scores may fluctuate on the basis of who happens to be assessing them, rather than on their ability. Indeed, research on the reliability of nursing OSCE scores has suggested that it is often lower than desirable. As such, opening the “black box” of nursing assessor judgements should lead to a better understanding of how judgements are made and, ultimately, a greater level of defensibility regarding decisions made on the basis of OSCE scores.

An empirical study about assessor cognition is currently being conducted by Conor Scully, Prometric PhD candidate at CARPE. For more information, please email conor.scully9@mail.dcu.ie.

The Educational Attainment of Pupils from Rural Locations and Schools in Northern Ireland - Gemma Cherry, June 29th, 2021.

In educational research literature across the world, the factors influencing pupils’ educational attainment, such as gender, socioeconomic status (SES) and ethnicity, are well documented. However, the question of whether educational outcomes are influenced by the geographical location (urban or rural) in which students live and are educated has received much less attention. Internationally, urban locations are taken for granted as the norm in research and are presupposed when nothing else is stated (Bæck, 2016). This presumption overlooks the fact that many children and young people live their lives in rural locations, and it has led to a lack of empirical evidence about, and understanding of, how rural pupils may become educationally (dis)advantaged. This gap was the reason the research described here was undertaken. The study presents the first high-quality analysis of the relationship between rurality and educational attainment outcomes in the Northern Ireland (NI) context.

Prior to this study, the only information available in NI on the relationship between location and education came from annual statistical publications. These publications, produced by the Department of Agriculture, Environment and Rural Affairs (DAERA), contained descriptive statistics on rural educational advantage and focused only on the post-primary sector. It was clear that more detailed, high-quality analysis was required to gain a better understanding of what lay beneath these statistics.

The study used a comprehensive data set covering both primary and post-primary educational stages that had not previously been available for research purposes. The primary-level data were provided by Granada Learning (GL) Assessment and were the first data relating to primary-level pupils to be made available in the NI context. The post-primary data set contained complete cohort information and, for the first time, matched the NI Census to the School Leavers Survey and the School Census.

The statistical method employed for the research was multilevel modelling, an approach that takes account of the fact that pupils are clustered within schools (Hox, 2010). The attainment outcomes used were English and maths at primary level and GCSE English, GCSE maths and the overall number of GCSEs achieved by pupils at post-primary level.
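To illustrate why clustering matters, here is a minimal Python sketch; it is not the analysis used in the study. It assumes a balanced design (the same number of pupils in every school) and uses made-up scores for three hypothetical schools, and the function names `icc1` and `design_effect` are illustrative. It estimates the intraclass correlation (the share of score variance lying between schools) from a one-way ANOVA, and the design effect, which is the factor by which clustering inflates the sampling variance of a mean relative to simple random sampling. A full multilevel model would estimate these variance components within a regression that also includes predictors such as rurality and SES.

```python
from statistics import mean


def icc1(groups):
    """Estimate ICC(1) from a one-way ANOVA (hypothetical helper).

    groups: a list of lists, one inner list of scores per school.
    Assumes a balanced design: every school has the same number of pupils.
    """
    k = len(groups)       # number of schools
    n = len(groups[0])    # pupils per school
    if any(len(g) != n for g in groups):
        raise ValueError("this sketch assumes equal group sizes")
    grand = mean(score for g in groups for score in g)
    group_means = [mean(g) for g in groups]
    # Between-school mean square: spread of school means around the grand mean
    msb = n * sum((m - grand) ** 2 for m in group_means) / (k - 1)
    # Within-school mean square: spread of pupils around their own school mean
    ssw = sum((score - m) ** 2 for g, m in zip(groups, group_means) for score in g)
    msw = ssw / (k * (n - 1))
    return (msb - msw) / (msb + (n - 1) * msw)


def design_effect(icc, n):
    """Kish design effect for clusters of size n (hypothetical helper)."""
    return 1 + (n - 1) * icc


# Made-up scores for three hypothetical schools (not data from the study)
schools = [[60, 62, 64], [70, 72, 74], [50, 52, 54]]
rho = icc1(schools)
print(round(rho, 3))                    # 0.961
print(round(design_effect(rho, 3), 2))  # 2.92
```

With these made-up scores almost all of the variance lies between schools, so treating the nine pupils as independent observations would overstate the precision of any estimate. Multilevel modelling avoids this by modelling the school level explicitly.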

The results of this research provide evidence that rurality has a statistically significant influence on primary pupils’ English attainment but not on their maths attainment. An overall rural advantage was found; however, interaction results revealed that this advantage was not experienced by every rural pupil. Rural boys from lower SES backgrounds were identified as a group at risk of lower English attainment at this level of education. Rurality was also found to have a statistically significant influence on all three attainment outcomes at post-primary level. Furthermore, the results show that pupils who attend non-grammar schools and pupils from lower SES positions are more strongly influenced by their location than pupils attending grammar schools and those from higher SES backgrounds. For example, the urban-rural achievement gap (to the advantage of rural pupils) was much wider in non-grammar schools than in grammar schools, where it was less apparent.

This study is significant in being the first to identify previously overlooked groups of pupils in need of additional educational support in NI. The evidence presented will be directly relevant to policy initiatives and programmes aimed at raising educational outcomes for disadvantaged students in NI.

Reference:

Cherry, G. (2021). Inequalities in Educational Attainment across Rural and Urban Locations: Secondary Data Analysis of Pupil Outcomes in Northern Ireland. PhD Thesis. School of Social Sciences, Education and Social Work: Queen’s University Belfast.

Other references:

Bæck, U. K. (2016). Rural Location and Academic Success—Remarks on Research, Contextualisation and Methodology. Scandinavian Journal of Educational Research, 60(4), 435–448.

Hox, J. (2010). Multilevel Analysis: Techniques and Applications (2nd ed.). New York: Routledge.

The first CARPE blog post was written by our Director, Professor Michael O'Leary. In this post, Michael discusses the Leaving Certificate 2020 Calculated Grades Survey.

Cancellation of the Leaving Certificate (LC) examinations in 2020 as a result of COVID-19, and the subsequent involvement of post-primary teachers in estimating marks and ranks for their own students as part of the Calculated Grades (CG) process, were unique events in the history of Irish education. Following the publication of LC results and completion of the appeals process, an online questionnaire survey of post-primary teachers was conducted in the closing months of 2020. It investigated how this cohort of teachers engaged with the CG process in their schools and whether the experience had affected how they perceive their role as assessors. Preliminary findings based on the responses of 713 teachers are contained in a report available to download here. A complementary paper published in Irish Educational Studies can be accessed here.

As reported, the data revealed that the teachers surveyed used a wide range of assessment information when estimating marks and ranks for their students. Not surprisingly, the outcomes of 5th and 6th year exams, as well as mock exams, were particularly important in informing teachers' judgements. In their comments, respondents also highlighted the importance of other sources of information, including their professional knowledge and expertise in State Examinations, in-school tracking and assessment records, historical State Examinations performance data, and student characteristics such as application to their work. Challenges identified by many respondents when estimating marks and ranks included decision-making around grade boundaries, combining qualitative and quantitative assessment data, reconciling inconsistencies in student performance, maintaining an unbiased position with respect to individual students and voicing concerns about how school colleagues arrived at their decisions. Overall, however, the majority of teachers indicated that they felt the alignment meetings worked well and expressed confidence in their professional judgements. Almost all felt that they had been fair to their students.

The pressure felt from members of the school community and the stress of having to engage in the process were clearly articulated by many. Decisions around the release of rank order data to students led many to express very strong feelings of annoyance and disappointment. A large number of comments focused on issues of fairness related to conscious and unconscious bias, approaches adopted by colleagues, the application of the DES guidelines, the use of school historical data and the impact of the national standardisation process on the grades awarded to students. While many were adamant that they would not engage in a calculated grades process in the future, some took a more nuanced view, indicating overall satisfaction with the process in the context of exceptional circumstances and highlighting the potential benefits it offered some students. Opinion was divided on the extent to which the CG experience would inform efforts to reform Senior Cycle.